Install Apache Hadoop on Windows

Hadoop is an open-source framework that helps process and store large amounts of data across multiple computers.

It efficiently handles vast, unstructured data by distributing the workload, making it ideal for big data analytics and large-scale web applications.

Hadoop is a go-to tool for organizations looking to manage complex data with ease.

Installing Apache Hadoop on Windows involves the following steps:

- Download the Hadoop zip.

- Unzip and Install Hadoop.

- Setting Up & Verifying Environment Variables.

- Configuring Hadoop.

- Replacing the Bin Folder.

- Testing the Setup.

- Verifying the Installation.

Prerequisites to Install Apache Hadoop on Windows

To install Hadoop on Windows, your machine should meet the following requirements:

- 8 GB of RAM is ideal; with an SSD, 4 GB may be enough.

- A quad-core CPU (1.80GHz or faster) is recommended for smooth operation.

- Java Runtime Environment (JRE) 1.8: Make sure you download the offline installer.

- Java Development Kit (JDK) 1.8

- Unzipping Tool (Use 7-Zip or WinRAR)

- Hadoop Zip: This tutorial uses version 2.9.2, but feel free to pick any stable version from the official Hadoop website.
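The Java 1.8 requirement above can be checked from a script. The following is a minimal sketch, assuming Python 3.7 or later is available on the machine (this guide does not otherwise require it); the function names are illustrative. It relies on the fact that `java -version` writes its banner to stderr, and that Java 8 and earlier report themselves as `1.x`.

```python
import re
import subprocess


def parse_java_major(version_output):
    """Return the Java major version (8 for '1.8.0_221'), or None if not found."""
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        return None
    first, second = match.group(1), match.group(2)
    # Java 8 and earlier use the 1.x scheme; later releases put the major version first.
    if first == "1" and second is not None:
        return int(second)
    return int(first)


def installed_java_major():
    """Run `java -version` and parse the result; returns None if java is not on PATH."""
    try:
        result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    except FileNotFoundError:
        return None
    # The version banner normally goes to stderr; fall back to stdout just in case.
    return parse_java_major(result.stderr or result.stdout)
```

Calling `installed_java_major()` should return 8 when the JDK 1.8 from the list above is the one found on PATH.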

Complete Guide to Install Hadoop on Windows

Before diving into the detailed steps for Hadoop installation on Windows, it’s essential to ensure that your system is properly prepared.

This guide will walk you through the setup process to get Hadoop up and running for big data tasks.

If you would rather handle big data tasks remotely and want added flexibility, a reliable Windows VPS can streamline the process.

Step 1: Unzip and Install Hadoop

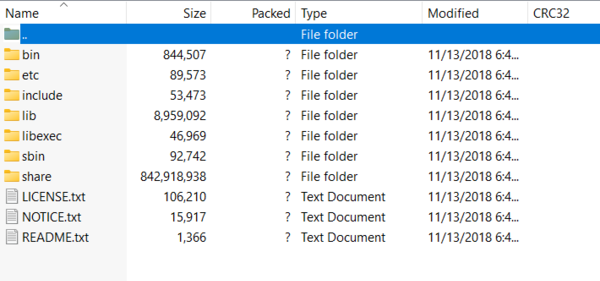

After downloading Hadoop, the first task is to extract the archive. Start by unzipping hadoop-2.9.2.tar.gz.

Inside, you will find another tar file (hadoop-2.9.2.tar), which also needs to be extracted. This double extraction gives you the actual Hadoop folder.

To keep things organized, create a dedicated folder in which to store Hadoop.

Note: Make sure not to use spaces in the folder names, as this can cause issues later on.
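As an alternative to the two manual extraction passes, the archive can be unpacked in one step from a script. This is a sketch only, assuming Python 3 is installed; `extract_hadoop` is a hypothetical helper name, and it has not been verified against every Hadoop release archive. It also enforces the no-spaces rule from the note above.

```python
import tarfile
from pathlib import Path


def extract_hadoop(archive, destination):
    """Extract a .tar.gz archive into destination and return the destination path."""
    dest = Path(destination)
    # Mirror the guide's advice: paths containing spaces can cause problems later.
    if " " in str(dest):
        raise ValueError(f"Destination path contains a space: {dest}")
    dest.mkdir(parents=True, exist_ok=True)
    # Mode "r:gz" decompresses and unpacks in a single pass,
    # so the intermediate .tar file never has to be handled separately.
    with tarfile.open(archive, "r:gz") as tar:
        tar.extractall(dest)
    return dest
```

For example, `extract_hadoop("hadoop-2.9.2.tar.gz", r"D:\Hadoop")` would unpack the download into `D:\Hadoop`, where the drive and folder are placeholders for your own choice.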

Step 2: Setting Up Environment Variables

This step is critical for ensuring your system recognizes where Java and Hadoop are installed.

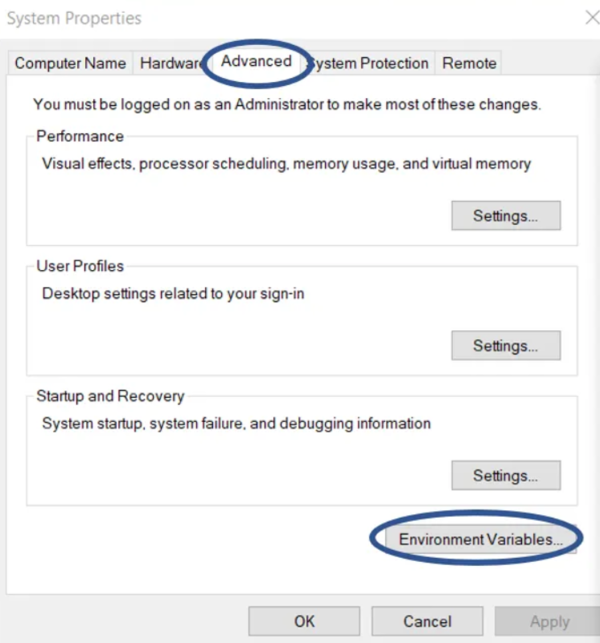

Follow these steps:

- Open the search bar on Windows and type “Environment Variables” to easily find the settings.

- Once inside, click “New” under User Variables.

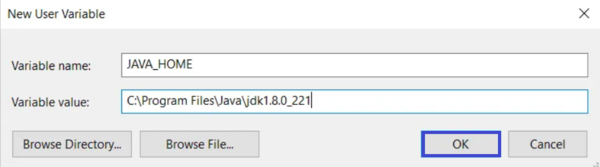

- Enter JAVA_HOME as the variable name, and for the variable value, paste the path to your JDK folder. Press OK to save.

- Just like with Java, set HADOOP_HOME. Create a new variable and set the path to your Hadoop directory.

- Lastly, edit the system Path variable so it knows where to find Java and Hadoop commands.

- Click on Path, then Edit, and add the following three lines one by one:

%JAVA_HOME%\bin

%HADOOP_HOME%\bin

%HADOOP_HOME%\sbin

After adding these paths, click OK to finish configuring the environment variables.

Step 3: Verifying Environment Variables

Before proceeding, let’s make sure everything is correctly set up. Open a new command prompt and run the following commands:

echo %JAVA_HOME%

echo %HADOOP_HOME%

echo %PATH%

If the first two commands print the correct paths and the PATH output includes the three entries added in Step 2, you’re good to go!
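The same verification can be automated. The sketch below assumes Python 3 is available; `check_hadoop_environment` is an illustrative name, not part of Hadoop. It takes any mapping of environment variables and reports what is missing, comparing PATH entries case-insensitively because Windows paths are not case-sensitive.

```python
import os


def check_hadoop_environment(env):
    """Return a list of problems found; an empty list means every check passed."""
    problems = []
    for name in ("JAVA_HOME", "HADOOP_HOME"):
        if not env.get(name):
            problems.append(f"{name} is not set")

    # Windows separates PATH entries with ';'. Normalize case and trailing slashes.
    path_entries = {
        entry.strip().rstrip("\\/").lower()
        for entry in env.get("PATH", "").split(";")
        if entry.strip()
    }

    # Build the three entries that Step 2 adds to PATH.
    expected = []
    if env.get("JAVA_HOME"):
        expected.append(env["JAVA_HOME"].rstrip("\\/") + "\\bin")
    if env.get("HADOOP_HOME"):
        home = env["HADOOP_HOME"].rstrip("\\/")
        expected.extend([home + "\\bin", home + "\\sbin"])

    for entry in expected:
        if entry.lower() not in path_entries:
            problems.append(f"PATH is missing {entry}")
    return problems
```

In a new command prompt session, `check_hadoop_environment(os.environ)` should return an empty list once Step 2 has been completed.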

Step 4: Configuring Hadoop

Now, you are ready to configure Hadoop itself. This part involves creating necessary folders and editing a few configuration files:

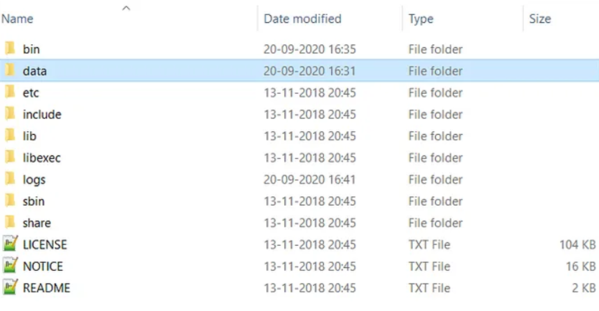

- Inside your Hadoop directory, create a new folder called data. In this data folder, create two subfolders: namenode and datanode. These will store Hadoop’s file system data. The NameNode manages the metadata, while the DataNode stores the actual data blocks.

- Navigate to the configuration directory (for example, D:\Hadoop\etc\hadoop) and edit the following configuration files:

* core-site.xml

* hdfs-site.xml

* mapred-site.xml

* yarn-site.xml

* hadoop-env.cmd

- The core-site.xml file tells Hadoop where the default filesystem is. To edit it, open it in a text editor and add the following property inside the <configuration> element:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

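</configuration>

- Editing hdfs-site.xml configures the storage locations for Hadoop’s NameNode and DataNode. In the values below, PATH~1 and PATH~2 are placeholders; replace them with the path to the data folder created earlier: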

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>PATH~1\namenode</value>

<final>true</final>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>PATH~2\datanode</value>

<final>true</final>

</property>

</configuration>

- To configure MapReduce, add this to mapred-site.xml:

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

- YARN is Hadoop’s resource manager. To edit yarn-site.xml, add the following lines:

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<!-- Site specific YARN configuration properties -->

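</configuration>

- Lastly, edit hadoop-env.cmd. Check that the JAVA_HOME path is correctly set in hadoop-env.cmd. If needed, replace the default line with:

set JAVA_HOME=%JAVA_HOME%

OR

set JAVA_HOME="C:\Program Files\Java\jdk1.8.0_221"

If the JDK path contains spaces, as in the second form, Hadoop’s scripts may fail to parse it. A common workaround is the 8.3 short name, which is typically C:\Progra~1\Java\jdk1.8.0_221.

Step 5: Replacing the Bin Folder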

Hadoop’s default bin folder might not work smoothly on Windows, so replacing it with a pre-configured one is better.

You’ll need to download the necessary files to replace the bin folder for Hadoop on Windows. A pre-configured bin folder for Hadoop 2.9.2 is available on GitHub, as is the full winutils package.

Download and extract the files, then use them to replace the existing bin folder in your Hadoop directory for better compatibility with Windows.
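Before testing the setup, it can help to confirm that the XML files edited in Step 4 are well-formed and contain the intended values, since a single unclosed tag can keep the daemons from starting. The following is a rough sketch, assuming Python 3 is installed; `read_hadoop_properties` is a hypothetical helper, not a Hadoop tool.

```python
import xml.etree.ElementTree as ET


def read_hadoop_properties(xml_path):
    """Parse a Hadoop *-site.xml file into a {name: value} dictionary.

    Raises xml.etree.ElementTree.ParseError if the file is not well-formed XML.
    """
    root = ET.parse(xml_path).getroot()
    if root.tag != "configuration":
        raise ValueError(f"Expected <configuration> root element, found <{root.tag}>")
    properties = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        if name:
            # Treat a missing <value> element as an empty string.
            properties[name.strip()] = (prop.findtext("value") or "").strip()
    return properties
```

For instance, reading core-site.xml from the configuration directory should return a dictionary in which `fs.defaultFS` maps to `hdfs://localhost:9000`.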

Step 6: Testing the Setup

Now it’s time to test if everything’s set up correctly:

- Format the NameNode

In a new command prompt, run:

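hadoop namenode -format

This initializes the NameNode’s storage system. The command is only needed the first time; formatting again later erases the existing HDFS metadata. In Hadoop 2.x this form prints a deprecation notice in favor of hdfs namenode -format, but both work.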

- Start Hadoop Services

To launch Hadoop, run the following command:

start-all.cmd

This will open several command windows showing different Hadoop daemons running, such as the NameNode, DataNode, ResourceManager, and NodeManager.
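Instead of checking the command windows by eye, the running daemons can be listed with `jps`, a tool that ships with the JDK and prints one `<pid> <class name>` pair per line. The sketch below assumes Python 3 is available; `missing_daemons` is an illustrative name, and the expected set is simply the four daemons named above.

```python
import subprocess

# The four daemons this guide expects start-all.cmd to launch.
EXPECTED_DAEMONS = {"NameNode", "DataNode", "ResourceManager", "NodeManager"}


def missing_daemons(jps_output):
    """Return the expected daemon names that do not appear in jps output, sorted."""
    running = set()
    for line in jps_output.splitlines():
        parts = line.split()
        # Each useful line looks like "<pid> <name>"; skip anything shorter.
        if len(parts) >= 2:
            running.add(parts[1])
    return sorted(EXPECTED_DAEMONS - running)


def check_running_daemons():
    """Run jps (found via %JAVA_HOME%\\bin on PATH) and report missing daemons."""
    result = subprocess.run(["jps"], capture_output=True, text=True)
    return missing_daemons(result.stdout)
```

An empty list from `check_running_daemons()` suggests all four daemons started; any name in the list points to the command window whose log is worth reading.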

Step 7: Verifying the Installation

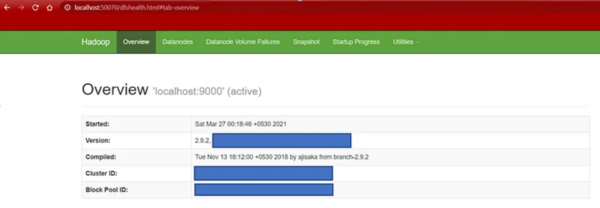

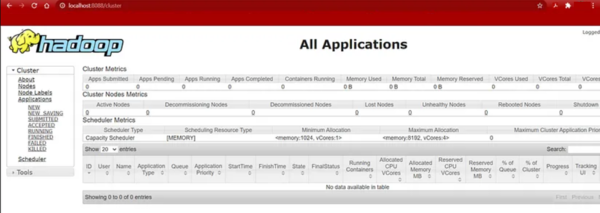

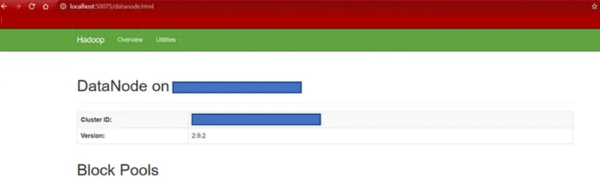

Once the installation is complete, check that Hadoop is up and running by opening your browser and visiting these URLs:

- NameNode: http://localhost:50070 – This page shows the health of your NameNode.

- ResourceManager: http://localhost:8088 – Here you can view resource usage and job information.

- DataNode: http://localhost:50075 – Open this address to verify data storage.
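The three addresses above can also be probed from a script, which is handy on a remote machine without a browser. This is a minimal sketch, assuming Python 3 is installed; the helper names are illustrative, and the ports are the ones listed above. It only checks that something accepts connections on each port, not that the page content is healthy.

```python
import socket

# Web UI ports taken from the list above.
HADOOP_WEB_PORTS = {"NameNode": 50070, "ResourceManager": 8088, "DataNode": 50075}


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


def check_web_uis(host="localhost"):
    """Map each daemon name to whether its web UI port is accepting connections."""
    return {name: is_port_open(host, port) for name, port in HADOOP_WEB_PORTS.items()}
```

A result where every value is `True` indicates that all three web interfaces are listening.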

Can I install Hadoop on Windows 10?

Yes, Hadoop installation on Windows 10 is possible by downloading the Hadoop binaries, setting up environment variables, configuring XML files, and replacing the bin folder with a Windows-compatible version.

Why is Hadoop not working after installation on Windows?

If Hadoop isn’t working after installation, it might be due to improperly configured environment variables, missing or incorrect paths in the core-site.xml and hdfs-site.xml files, or an outdated version of the bin folder.

To solve this issue, ensure you’ve set the paths correctly and replaced the bin folder with a Windows-compatible version.

How to fix ‘Hadoop is not recognized as an internal or external command’?

This error means your environment variables are not set up correctly.

To troubleshoot this error, ensure that the %JAVA_HOME%\bin, %HADOOP_HOME%\bin, and %HADOOP_HOME%\sbin paths are added to the system PATH.

Also, verify the settings by running echo %JAVA_HOME% and echo %HADOOP_HOME% in a new command prompt.

Conclusion

This article walked through setting up Apache Hadoop on a Windows machine step by step, from unzipping files and configuring environment variables to editing the essential Hadoop configuration files.

With Hadoop installed and running, you can start working with it by uploading data, running MapReduce jobs, or exploring YARN for resource management.

With your environment ready, Hadoop can now be utilized for big data processing and analytics tasks on your system.